Adding Databricks Data Warehouses

A Databricks data warehouse contains the tables and views that you want to import as fact datasets and dimension datasets into AtScale models. It also contains aggregate table instances that either you or the AtScale engine creates in a database that you specify.

To configure a Databricks data warehouse, you must have a special AtScale license. Ask AtScale Support about obtaining a Databricks-enabled license.

Prerequisites

For AtScale:

- You must have the `datawarehouses_admin` or `datawarehouses_manage` realm role assigned in the Identity Broker.

- You must know the schema to use for building aggregate tables in the data warehouse. AtScale reads and writes aggregate data to this schema, so the AtScale service account user must have ALL privileges on it. BI tool user accounts should not have the SELECT permission on it.
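The sketch below shows one way to express these schema privileges. It generates Databricks SQL `GRANT` and `REVOKE` statements for the aggregate schema and assumes `catalog.schema` naming. The catalog, schema, and principal names are hypothetical placeholders, and the helper functions are not part of AtScale. Review the generated statements against your own access model before you run them.

```python
from typing import List


def quote_identifier(name: str) -> str:
    """Wrap an identifier in backticks, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def aggregate_schema_privileges(
    catalog: str, schema: str, service_account: str, bi_principals: List[str]
) -> List[str]:
    """Return SQL granting the AtScale service account ALL PRIVILEGES on the
    aggregate schema and revoking SELECT on it from each BI tool principal."""
    target = f"{quote_identifier(catalog)}.{quote_identifier(schema)}"
    statements = [
        f"GRANT ALL PRIVILEGES ON SCHEMA {target} TO {quote_identifier(service_account)}"
    ]
    for principal in bi_principals:
        statements.append(
            f"REVOKE SELECT ON SCHEMA {target} FROM {quote_identifier(principal)}"
        )
    return statements


if __name__ == "__main__":
    # Hypothetical names; substitute your own catalog, schema, and principals.
    for statement in aggregate_schema_privileges(
        "main", "atscale_aggs", "atscale-svc@example.com", ["bi-users"]
    ):
        print(statement + ";")
```

In Unity Catalog, privileges granted on a parent catalog are inherited by its schemas. A `REVOKE` on the schema therefore removes only a grant made directly on that schema, not a `SELECT` inherited from a catalog-level grant.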

For Databricks:

- Use a supported version of Databricks. For more information, see Supported Tools and Platforms.

- When using Databricks SQL or Unity Catalog, make sure they are configured as needed. For details, see Databricks SQL guide and What is Unity Catalog.

- Because Databricks does not support JDBC authentication with a username and password, you must generate a personal access token. Databricks recommends using access tokens that belong to service principals. For more information about obtaining them, see Manage service principals. When you create the Databricks connection (described below), use the token as the password and `token` as the username.

Prerequisites for enabling impersonation

AtScale supports impersonation for Databricks data warehouses. To configure impersonation, you must use Microsoft Entra ID with OpenID Connect as your IdP. For instructions on configuring this in the Identity Broker, see Configuring Microsoft Entra ID With OpenID Connect.

You must also do the following:

- Ensure that your Databricks instance is instantiated in Entra ID.

- In Azure:

  - Open the application for the AtScale Identity Broker.

  - On the Overview page, click View API Permissions, then click Add a permission.

  - In the Request API permissions pane, go to the APIs my organization uses tab, then search for and select AzureDatabricks.

  - Select the user_impersonation checkbox.

  - Click Add permissions.

  - In the sidebar, under Manage, click Expose an API.

  - In the Application ID URI field, copy the ID for the AtScale application. This should be everything after `api://`. Make a note of this ID, because you will need it later to configure the Identity Broker.

- In the Identity Broker:

  - In the sidebar, select Identity providers, then open your Entra ID IdP for editing.

  - Open the Advanced dropdown and enable the Disable user info option.

  - In the Scopes field, enter the AtScale application ID you obtained from Entra ID above, with `/.default` added to the end: `<application_ID>/.default`

    Note: If you have multiple APIs defined in Entra ID, you may need to include `api://` before the application ID: `api://<application_ID>/.default`

  - Go to the Mappers tab and click Add mapper.

  - Define an attribute mapper for one of your Entra ID attributes that includes all users who require access to your Databricks data warehouse.

  - In the Role field, select the `query_user` and `impersonation_user` roles.

  - Click Save.

- In Design Center, set the `idp.token.audience` global setting to `2ff814a6-3304-4ab8-85cb-cd0e6f879c1d/.default`. This value is the default scope of the Databricks resource ID.
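The scope values above come from simple string handling. The minimal sketch below shows how the Application ID URI copied from Entra ID becomes the Scopes value, with and without the `api://` prefix, next to the `idp.token.audience` value given above. The helper names and the placeholder GUID are hypothetical and are not part of AtScale or Entra ID.

```python
API_PREFIX = "api://"

# Databricks resource ID default scope, used for the idp.token.audience setting.
DATABRICKS_AUDIENCE = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d/.default"


def application_id_from_uri(app_id_uri: str) -> str:
    """Return everything after 'api://' in the Entra ID Application ID URI."""
    if not app_id_uri.startswith(API_PREFIX):
        raise ValueError(f"expected a URI starting with {API_PREFIX!r}: {app_id_uri!r}")
    return app_id_uri[len(API_PREFIX):]


def identity_broker_scope(application_id: str, include_api_prefix: bool = False) -> str:
    """Build the Scopes value '<application_ID>/.default'.

    Set include_api_prefix=True to produce 'api://<application_ID>/.default',
    which may be needed when multiple APIs are defined in Entra ID.
    """
    scope = f"{application_id}/.default"
    return API_PREFIX + scope if include_api_prefix else scope


if __name__ == "__main__":
    # Placeholder GUID; use the Application ID URI of your AtScale application.
    app_id = application_id_from_uri("api://00000000-0000-0000-0000-000000000000")
    print(identity_broker_scope(app_id))
    print(identity_broker_scope(app_id, include_api_prefix=True))
    print(DATABRICKS_AUDIENCE)
```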

When you add your Databricks data warehouse in AtScale, be sure to enable the Enable to impersonate the client when connecting to data warehouse checkbox. For instructions, see the following section.

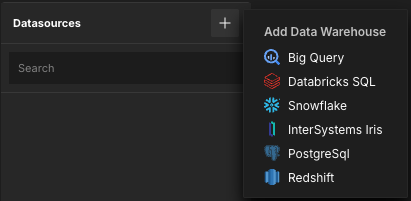

Add a data warehouse

- In Design Center, open the Datasources panel.

- Click the + icon and select Databricks SQL.

  The Add Data Warehouse panel opens.

- Enter a unique Name for the data warehouse. AtScale displays this name in Design Center and uses it in log files.

- Enter the External connection ID for the data warehouse. You can optionally enable the Override generated value? toggle to default this value to the name of the data warehouse.

- Enter the name of the Aggregate Catalog, which AtScale uses when creating aggregate tables. You must create this catalog; AtScale does not create one automatically. Also, be aware of the following:

  - If the catalog name contains non-alphabetic characters, surround the name with backtick characters (`).

  - AtScale supports Databricks SQL clusters with Unity Catalog either enabled or disabled, so three-part namespace support is enabled for Databricks SQL.
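The minimal sketch below makes the naming rules concrete by building a three-part `catalog.schema.table` name for an aggregate table. It applies the backtick rule above conservatively: any name that is not purely alphabetic is quoted, and embedded backticks are doubled. Quoting a name that does not need it is harmless in Databricks SQL. As described for the Aggregate Schema in the next step, the sketch falls back to the `hive_metastore` catalog when Unity Catalog is disabled. The function, schema, and table names are hypothetical.

```python
import re
from typing import Optional

# Names made only of ASCII letters are left unquoted; everything else is quoted.
_ALPHABETIC = re.compile(r"[A-Za-z]+")


def quote_if_needed(name: str) -> str:
    """Backtick-quote a name that contains non-alphabetic characters.

    Embedded backticks are escaped by doubling them.
    """
    if _ALPHABETIC.fullmatch(name):
        return name
    return "`" + name.replace("`", "``") + "`"


def qualified_aggregate_name(
    schema: str,
    table: str,
    catalog: Optional[str] = None,
    unity_catalog: bool = True,
) -> str:
    """Return a three-part catalog.schema.table name for an aggregate table."""
    if not unity_catalog:
        # Without Unity Catalog, the aggregate schema lives in hive_metastore,
        # so any catalog argument is ignored.
        catalog = "hive_metastore"
    elif catalog is None:
        raise ValueError("an aggregate catalog is required when Unity Catalog is enabled")
    return ".".join(quote_if_needed(part) for part in (catalog, schema, table))


if __name__ == "__main__":
    # Hypothetical catalog, schema, and table names.
    print(qualified_aggregate_name("aggs", "sales", catalog="analytics-prod"))
    print(qualified_aggregate_name("aggs", "sales", unity_catalog=False))
```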

- Enter the name of the Aggregate Schema for AtScale to use when creating aggregate tables. You must create this schema; AtScale does not create one automatically.

  If Unity Catalog is disabled, the aggregate schema resides in the default catalog, `hive_metastore`. If Unity Catalog is enabled, you choose the catalog and schema in which the aggregates are stored.

- (Optional) In the Impersonation section, select the Enable to impersonate the client when connecting to data warehouse checkbox to enable impersonation. This option requires that you have completed the prerequisites for enabling impersonation above.

  When this option is enabled, the following options appear:

  - Always use Canary Queries: Determines whether canary queries are required in the event of aggregate misses.

  - Allow Partial Aggregate Usage: Allows mixed aggregate and raw data queries.

- In the Access Controls section, add the users and groups that you want to be able to access the data warehouse and its data. For more information on how data warehouse security works in AtScale, see About Data Warehouse Security.

- Add a connection to the data warehouse. You must define at least one connection to finish adding the data warehouse.

  - In the Connections section, click Connection Required. The Add Connection panel opens.

  - Complete the following fields:

    - Name: The name of the connection.

    - Host: The hostname of the Databricks server.

    - Port: The port number used for the connection.

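  - In the Authorization section:

    - In the Authentication Method field, select Password.

    - Enter the Username and Password that AtScale uses when connecting to the data warehouse. As described in the prerequisites, use `token` as the username and your personal access token as the password.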

  - (Optional) In the Additional Settings section:

    - Access your Databricks cluster and make sure the following flags are set: `transportMode`, `ssl`, `httpPath`, `AuthMech`, `UID`, `PWD`, and (optionally) `UseNativeQuery=1`.

    - In the Extra jdbc flags field, enter all of the above flags for the connection except `httpPath`, which is set in the next step. For example: `transportMode=http;ssl=1;AuthMech=3;UID=token;PWD=<tokenID>;UseNativeQuery=1`

    - In the Http path field, enter the `httpPath` flag. For example: `httpPath=<path>`


  - Click Test connection to test the connection.

  - Click Add.

    The connection is added to the data warehouse. You can add more connections as needed.
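The Extra jdbc flags value is a semicolon-separated list of `key=value` pairs, and `httpPath` goes in its own field. The sketch below builds both values from a dictionary. The helper names and the `DATABRICKS_TOKEN` environment variable are hypothetical. Reading the token from the environment is only one way to avoid hard-coding it.

```python
import os
from typing import Dict


def extra_jdbc_flags(flags: Dict[str, str]) -> str:
    """Join flags into key=value pairs separated by semicolons.

    httpPath is rejected because it belongs in the separate Http path field.
    """
    if "httpPath" in flags:
        raise ValueError("set httpPath in the Http path field, not in Extra jdbc flags")
    return ";".join(f"{key}={value}" for key, value in flags.items())


def http_path_field(path: str) -> str:
    """Format the value for the Http path field, e.g. httpPath=<path>."""
    return f"httpPath={path}"


if __name__ == "__main__":
    # Hypothetical: read the personal access token from an environment variable
    # instead of hard-coding it; fall back to the placeholder from the example.
    token = os.environ.get("DATABRICKS_TOKEN", "<tokenID>")
    flags = {
        "transportMode": "http",
        "ssl": "1",
        "AuthMech": "3",
        "UID": "token",  # the username is the literal string "token"
        "PWD": token,
        "UseNativeQuery": "1",  # optional
    }
    print(extra_jdbc_flags(flags))
    print(http_path_field("<path>"))
```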

- (Optional) If your data warehouse has multiple connections, you can configure their query mappings. The mappings determine which types of queries each connection is permitted to run. A query role can be assigned to only one connection at a time.

  In the Query Mapping section, select the connection you want to assign to each type of query:

  - Small Interactive Queries: Small user queries. These are usually BI queries that can be optimized by AtScale aggregates or that involve low-cardinality dimensions.

  - Large Interactive Queries: Large user queries. These are usually BI queries that involve full scans of the raw fact table or high-cardinality dimensions.

  - Aggregate Build Queries: Queries made when building aggregate tables. Assign this mapping to a connection that has more compute power and is not subject to resource limitations that would prevent it from building aggregate tables.

  - System Queries: AtScale system processing, such as running aggregate maintenance jobs and querying system statistics.

  - Canary Queries: Available only when impersonation is enabled. Queries that run periodically to monitor system response times, workload, and similar metrics.
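The sketch below models the two mapping rules stated above: each query role goes to a single connection, and Canary Queries can be mapped only when impersonation is enabled. `QueryRole` and `validate_query_mapping` are hypothetical names used for illustration. They are not part of an AtScale API, and the connection names are placeholders.

```python
from enum import Enum
from typing import Dict, Set


class QueryRole(Enum):
    """Query types listed in the Query Mapping section."""
    SMALL_INTERACTIVE = "Small Interactive Queries"
    LARGE_INTERACTIVE = "Large Interactive Queries"
    AGGREGATE_BUILD = "Aggregate Build Queries"
    SYSTEM = "System Queries"
    CANARY = "Canary Queries"


def validate_query_mapping(
    mapping: Dict[QueryRole, str],
    connections: Set[str],
    impersonation_enabled: bool,
) -> None:
    """Raise ValueError if the mapping breaks one of the documented rules.

    Keying the dict by role means each role maps to exactly one connection;
    this sketch does not limit how many roles share a connection.
    """
    for role, connection in mapping.items():
        if connection not in connections:
            raise ValueError(f"{role.value}: unknown connection {connection!r}")
    if QueryRole.CANARY in mapping and not impersonation_enabled:
        raise ValueError("Canary Queries can be mapped only when impersonation is enabled")


if __name__ == "__main__":
    # Hypothetical connection names.
    connections = {"interactive", "large-cluster"}
    mapping = {
        QueryRole.SMALL_INTERACTIVE: "interactive",
        QueryRole.LARGE_INTERACTIVE: "large-cluster",
        QueryRole.AGGREGATE_BUILD: "large-cluster",
        QueryRole.SYSTEM: "interactive",
    }
    validate_query_mapping(mapping, connections, impersonation_enabled=False)
    print("mapping is valid")
```

Because the mapping is a dictionary keyed by role, it cannot assign one role to two connections, which mirrors the one-connection-per-role rule.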

- Click Save Data Warehouse.

Additional information

For additional information on what you can do with AtScale and Databricks, see the Using AtScale with Databricks Genie for Semantic Analytics at Scale video.